Post summary: A tutorial on how to set up JaCoCo code coverage for unit tests so that it runs on every Maven build.

Code coverage

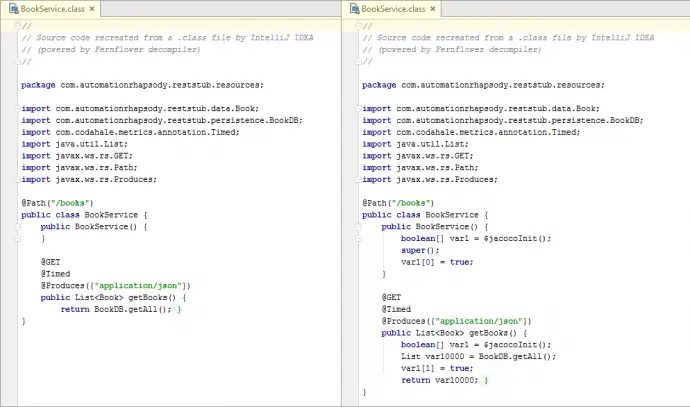

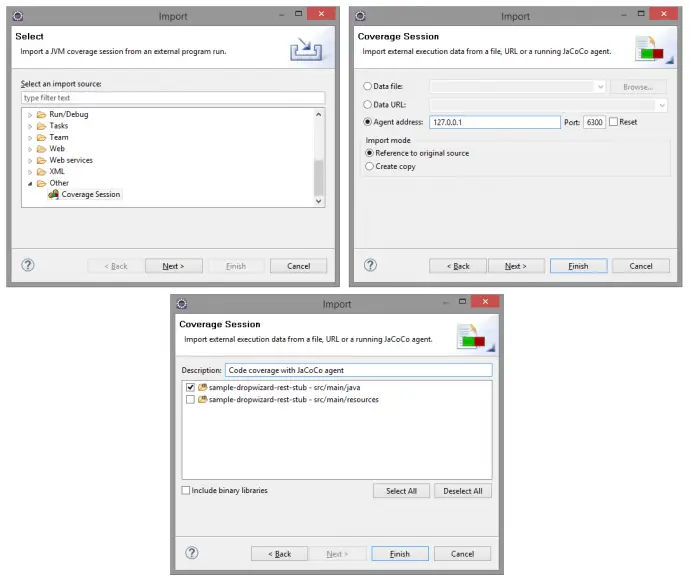

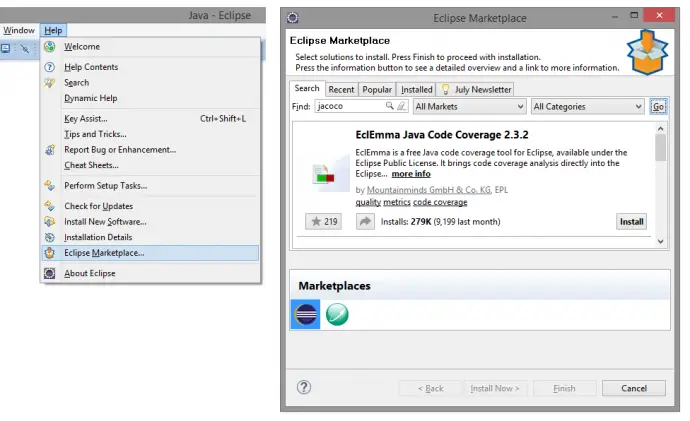

There are two main approaches to code coverage. The first is running code coverage on each build to measure unit test coverage. Once configured, this needs no manual intervention. Depending on the tools, there is even an option to fail the build if code coverage does not reach the desired threshold. This post is dedicated to that approach. The second is running code coverage on automated or even manual functional test scenarios. It is described in the Code coverage of manual or automated tests with JaCoCo post.

Unit tests code coverage

The theory described in the What about code coverage post also applies to unit test code coverage, but the real benefits of unit test code coverage are:

- Unit tests code coverage can be automated on every build

- The build can be configured to fail if a specific threshold is not met

JaCoCo for Maven

JaCoCo provides a plugin for Maven builds. More details, including the goals it supports, can be found on the JaCoCo Maven plugin page. The bare minimum to make it work is to set up the prepare-agent and report goals. The report goal is best bound to the Maven test phase, which is done with the phase element set to test. You can bind it to other phases, such as package or install, but since coverage is measured on tests, the test phase is the natural place. The XML block to add to the pom.xml file is:

    <plugin>
      <groupId>org.jacoco</groupId>
      <artifactId>jacoco-maven-plugin</artifactId>
      <version>0.7.4.201502262128</version>
      <executions>
        <execution>
          <id>jacoco-initialize</id>
          <goals>
            <goal>prepare-agent</goal>
          </goals>
        </execution>
        <execution>
          <id>jacoco-report</id>
          <phase>test</phase>
          <goals>
            <goal>report</goal>
          </goals>
        </execution>
      </executions>
    </plugin>
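The post does not say where in pom.xml the plugin block goes. In a standard Maven project, plugins are declared under build/plugins, so a minimal sketch of the placement looks like this. The comments stand in for the rest of the project definition, which is not shown in this post.

```xml
<project>
  <!-- groupId, artifactId, version, dependencies and so on go here -->
  <build>
    <plugins>
      <!-- paste the jacoco-maven-plugin <plugin> element here -->
    </plugins>
  </build>
</project>
```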

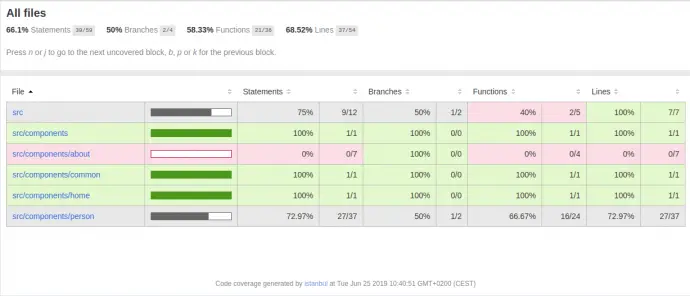

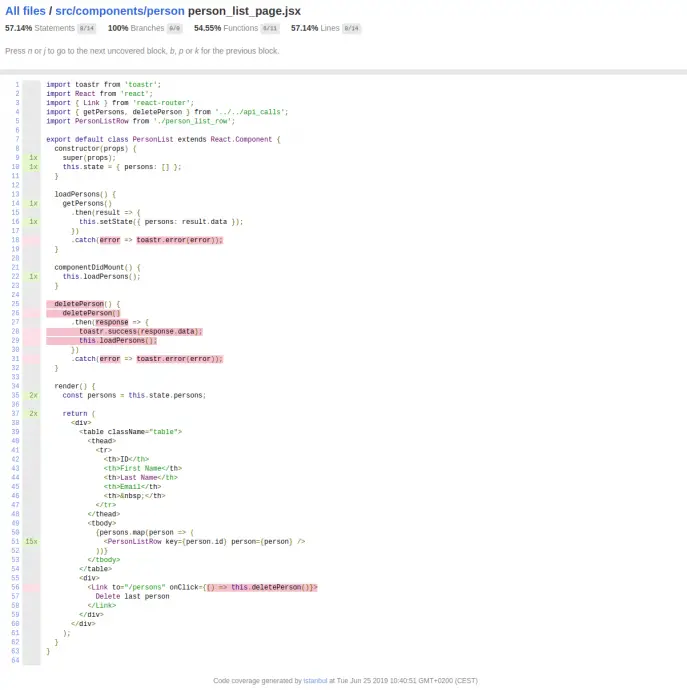

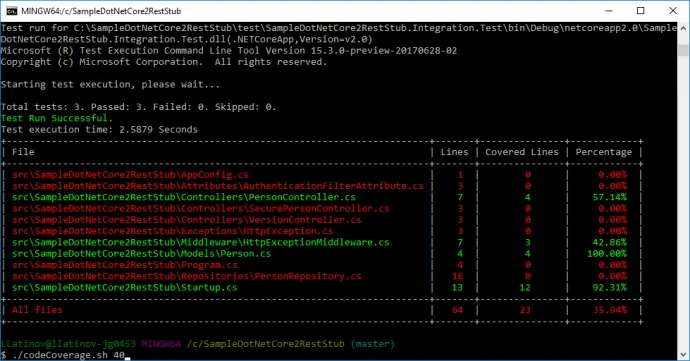

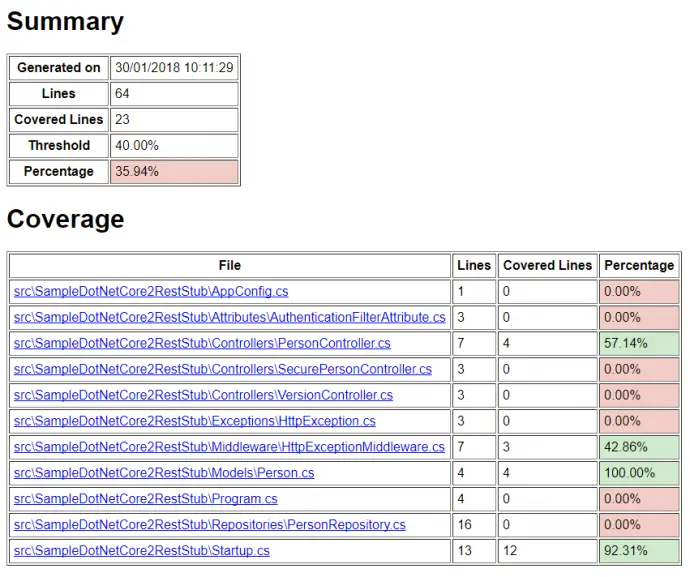

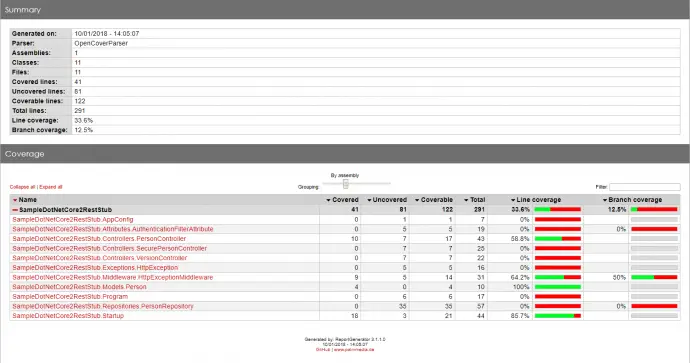

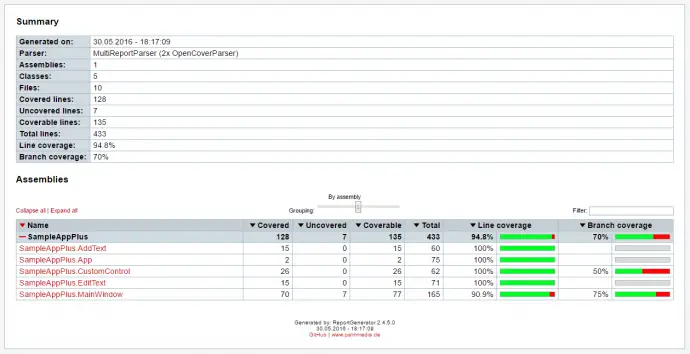

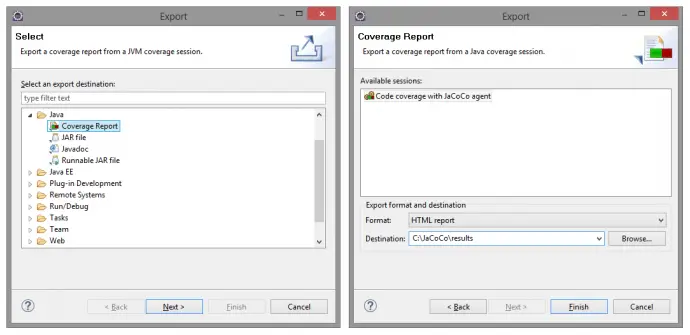

Once this is configured, run it with the mvn clean test command. After a successful build, the JaCoCo report can be found in the /target/site/jacoco/index.html file. A similar report can be seen in the HTML JaCoCo report.

Add coverage thresholds

Adding a unit test code coverage report is good, but it can be improved. A good practice is to add code coverage thresholds: if the predefined coverage percentage is not reached, the build fails. This practice can have the negative effect of unit tests being written just for the sake of coverage, but then again it is up to the development team to keep quality high. Thresholds are added with the check goal:

    <execution>
      <id>jacoco-check</id>
      <phase>test</phase>
      <goals>
        <goal>check</goal>
      </goals>
      <configuration>
        <rules>
          <rule implementation="org.jacoco.maven.RuleConfiguration">
            <element>BUNDLE</element>
            <limits>
              <limit implementation="org.jacoco.report.check.Limit">
                <counter>INSTRUCTION</counter>
                <value>COVEREDRATIO</value>
                <minimum>0.60</minimum>
              </limit>
            </limits>
          </rule>
        </rules>
      </configuration>
    </execution>

The check goal should be added along with prepare-agent. The report goal is not mandatory, but it can also be added in order to inspect where code coverage is insufficient. Note that the implementation attribute is mandatory only for Maven 2. With Maven 3 the attributes can be removed.
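Putting the pieces together, a complete plugin block for Maven 3 might look like the sketch below. It reuses the version, execution ids and the 60% threshold from the snippets in this post and drops the implementation attributes. The report execution is declared before check on the assumption that Maven runs executions bound to the same phase in declaration order, so the report should still be generated when the check fails.

```xml
<plugin>
  <groupId>org.jacoco</groupId>
  <artifactId>jacoco-maven-plugin</artifactId>
  <version>0.7.4.201502262128</version>
  <executions>
    <!-- Attaches the JaCoCo agent to the JVM that runs the unit tests -->
    <execution>
      <id>jacoco-initialize</id>
      <goals>
        <goal>prepare-agent</goal>
      </goals>
    </execution>
    <!-- Optional: generates the HTML report for inspection -->
    <execution>
      <id>jacoco-report</id>
      <phase>test</phase>
      <goals>
        <goal>report</goal>
      </goals>
    </execution>
    <!-- Fails the build when the threshold is not met -->
    <execution>
      <id>jacoco-check</id>
      <phase>test</phase>
      <goals>
        <goal>check</goal>
      </goals>
      <configuration>
        <rules>
          <rule>
            <element>BUNDLE</element>
            <limits>
              <limit>
                <counter>INSTRUCTION</counter>
                <value>COVEREDRATIO</value>
                <minimum>0.60</minimum>
              </limit>
            </limits>
          </rule>
        </rules>
      </configuration>
    </execution>
  </executions>
</plugin>
```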

JaCoCo check rules

For the check goal to work, at least one rule element should be added, as shown above. A rule is defined for a particular scope. Available scopes are: BUNDLE, PACKAGE, CLASS, SOURCEFILE or METHOD. More details can be found in the JaCoCo check goal documentation. In the current example the rule is for BUNDLE, which means the whole code under analysis. PACKAGE is as far as I would go. Even with it, there can be unpleasant surprises, such as a utility package or a package of POJOs (data objects without business logic inside): the PACKAGE rule will still require the given code coverage for such a package. This means you will have to write unit tests just to satisfy the coverage demand.
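One possible way to soften the PACKAGE surprise is to exclude such packages from the rule. The sketch below assumes that a rule accepts an excludes list of element names, as described in the JaCoCo check goal documentation, and that Maven 3 is used. The package names com.example.model and com.example.util are hypothetical placeholders, and it is worth verifying the behavior against the plugin version in use.

```xml
<rule>
  <element>PACKAGE</element>
  <!-- Hypothetical package names: POJO and utility packages left out of the check -->
  <excludes>
    <exclude>com.example.model</exclude>
    <exclude>com.example.util</exclude>
  </excludes>
  <limits>
    <limit>
      <counter>INSTRUCTION</counter>
      <value>COVEREDRATIO</value>
      <minimum>0.60</minimum>
    </limit>
  </limits>
</rule>
```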

JaCoCo check limits

Although not mandatory, it is good for each rule to have at least one limit element, as shown above.

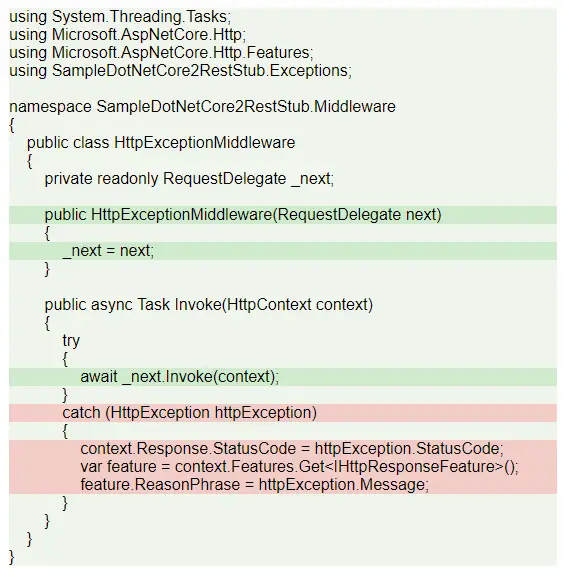

Available limit counters are: INSTRUCTION, LINE, BRANCH, COMPLEXITY, METHOD, CLASS. Those are the values measured in the report. Some of them are JaCoCo specific, others are in accordance with general code coverage theory. See more details on the JaCoCo counters page. Check the sample report at HTML JaCoCo report to see how counters are displayed.

Available limit values are: TOTALCOUNT, COVEREDCOUNT, MISSEDCOUNT, COVEREDRATIO, MISSEDRATIO. They define how the counter is measured. COUNT values are absolute numbers tied to the size of the current code base, so they cannot be compared against any industry standard. This is why RATIO values are much more suitable.

The actual threshold is set in the minimum or maximum element. Minimum is generally used with COVERED values, maximum with MISSED values. Note that for RATIO values this should be a decimal number between 0 and 1, e.g. 0.60 in the example above means 60%.
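As a small illustration of pairing maximum with a MISSED value, the limit below is a sketch that would allow at most 40% of lines to be missed. The LINE counter and the 0.40 value are arbitrary choices for illustration, and Maven 3 is assumed, so the implementation attribute is omitted.

```xml
<limit>
  <counter>LINE</counter>
  <value>MISSEDRATIO</value>
  <!-- At most 40% of lines may be missed, i.e. at least 60% covered -->
  <maximum>0.40</maximum>
</limit>
```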

With the given counters and values there can be many combinations. The bare minimum is to use INSTRUCTION with COVEREDRATIO, as shown in the example above. Still, if you want to be really precise, several limits with different counters can be used. Below is a Maven 3 example defining that every class should be covered by unit tests:

    <limit>
      <counter>CLASS</counter>
      <value>MISSEDCOUNT</value>
      <maximum>0</maximum>
    </limit>
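To show how several limits sit together, here is a sketch of a single BUNDLE rule that combines the two limits used in this post: at least 60% instruction coverage and no class left completely uncovered. It assumes Maven 3, so the implementation attributes are omitted.

```xml
<rule>
  <element>BUNDLE</element>
  <limits>
    <!-- At least 60% of instructions must be covered -->
    <limit>
      <counter>INSTRUCTION</counter>
      <value>COVEREDRATIO</value>
      <minimum>0.60</minimum>
    </limit>
    <!-- No class may be missed entirely -->
    <limit>
      <counter>CLASS</counter>
      <value>MISSEDCOUNT</value>
      <maximum>0</maximum>
    </limit>
  </limits>
</rule>
```

The build fails if any one of the limits in the rule is violated.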

Configurations are good practice

Hard-coding is rarely a good practice. The example above has a hard-coded value of 60% instruction code coverage, below which the build will fail. A better way is to define a property and then use it. The properties element is defined at the root level of pom.xml:

    <properties>
      <jacoco.percentage.instruction>0.60</jacoco.percentage.instruction>
    </properties>

...

    <limit>
      <counter>INSTRUCTION</counter>
      <value>COVEREDRATIO</value>
      <minimum>${jacoco.percentage.instruction}</minimum>
    </limit>

The benefit of a property is that it can easily be overridden at runtime. Just specify the new value as a system property: mvn clean test -Djacoco.percentage.instruction=0.20. In this way, pom.xml can hold a general target value of 0.60, and until that target is reached it can be overridden with 0.20.

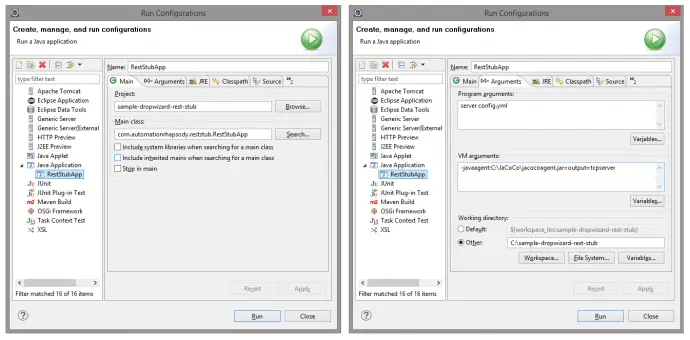

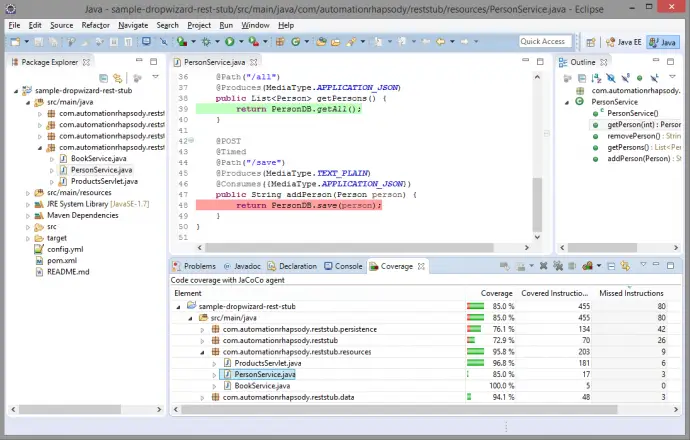

Experiment with JaCoCo checks

The sample application to experiment with is the one introduced in the Build a RESTful stub server with Dropwizard post. It can be found in the GitHub sample-dropwizard-rest-stub repository. It has very little code and just one unit test with very low code coverage. This makes it a good place to try different combinations of counters and values and see exactly how JaCoCo works.

Conclusion

Code coverage of unit tests with thresholds is a good practice. This tutorial gives an introduction to implementing and using them, so go ahead and apply them.
