Post summary: A practical code example of how to use AWS Transcribe from an application with a React frontend and a .NET Core backend.

AWS Transcribe

Amazon Transcribe makes it easy for developers to add speech to text capabilities to their applications. Amazon Transcribe uses a deep learning process called automatic speech recognition (ASR) to convert speech to text quickly and accurately. Amazon Transcribe can be used to transcribe customer service calls, automate subtitling, and generate metadata for media assets to create a fully searchable archive. You can use Amazon Transcribe Medical to add medical speech to text capabilities to clinical documentation applications.

Real-time usage

Streaming transcription uses HTTP/2's implementation of bidirectional streams to carry audio and transcripts between your application and the Amazon Transcribe service. Bidirectional streams let your application send and receive data at the same time, which gives quicker, more responsive results. Read more, along with a Java example, in the "Amazon Transcribe now supports real-time transcriptions" article. In the browser, bidirectional communication is achieved with a WebSocket, and Amazon Transcribe provides a WebSocket endpoint for streaming.
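Whatever the transport, each audio chunk travels as a binary AWS event stream message: a 12-byte prelude (total length, headers length, and a CRC-32 of those 8 bytes), the headers, the payload, and a trailing CRC-32 of everything before it. The React code later in this post delegates this to `EventStreamMarshaller`; the sketch below encodes an `AudioEvent` by hand only to show the layout. It is a simplified illustration that supports string headers (value type 7) only, and `crc32` and `encodeEventMessage` are hypothetical helpers, not part of any AWS SDK.

```typescript
// Hypothetical helpers that illustrate the AWS event stream framing.
// Real code should keep using EventStreamMarshaller from the AWS SDK.

// CRC-32 (IEEE, reflected polynomial 0xEDB88320), the checksum the framing uses.
const CRC_TABLE = new Uint32Array(256);
for (let n = 0; n < 256; n++) {
  let c = n;
  for (let k = 0; k < 8; k++) {
    c = c & 1 ? 0xedb88320 ^ (c >>> 1) : c >>> 1;
  }
  CRC_TABLE[n] = c >>> 0;
}

export function crc32(bytes: Uint8Array): number {
  let crc = 0xffffffff;
  for (let i = 0; i < bytes.length; i++) {
    crc = CRC_TABLE[(crc ^ bytes[i]) & 0xff] ^ (crc >>> 8);
  }
  return (crc ^ 0xffffffff) >>> 0;
}

// String header: name length (1 byte), name, value type 7 (string),
// value length (2 bytes, big-endian), value.
function encodeHeaders(headers: Record<string, string>): Uint8Array {
  const encoder = new TextEncoder();
  const bytes: number[] = [];
  for (const name of Object.keys(headers)) {
    const nameBytes = encoder.encode(name);
    const valueBytes = encoder.encode(headers[name]);
    bytes.push(nameBytes.length);
    nameBytes.forEach((b) => bytes.push(b));
    bytes.push(7, valueBytes.length >> 8, valueBytes.length & 0xff);
    valueBytes.forEach((b) => bytes.push(b));
  }
  return Uint8Array.from(bytes);
}

export function encodeEventMessage(headers: Record<string, string>, payload: Uint8Array): Uint8Array {
  const headerBytes = encodeHeaders(headers);
  const totalLength = 12 + headerBytes.length + payload.length + 4;
  const message = new Uint8Array(totalLength);
  const view = new DataView(message.buffer);

  // Prelude: total length and headers length (big-endian), then a CRC of those 8 bytes.
  view.setUint32(0, totalLength);
  view.setUint32(4, headerBytes.length);
  view.setUint32(8, crc32(message.subarray(0, 8)));

  message.set(headerBytes, 12);
  message.set(payload, 12 + headerBytes.length);

  // Message CRC covers everything that precedes it.
  view.setUint32(totalLength - 4, crc32(message.subarray(0, totalLength - 4)));
  return message;
}

// Wrap a chunk of PCM audio with the same two headers getAudioEventMessage uses in the React code.
const audioEvent = encodeEventMessage(
  { ":message-type": "event", ":event-type": "AudioEvent" },
  new Uint8Array(3200), // hypothetical chunk: 100 ms of 16 kHz, 16-bit mono silence
);
```

The receiving side reverses these steps: it reads the prelude, checks both CRCs, and then parses the headers and payload.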

How to use AWS Transcribe

The first thing needed is an AWS account with sufficient Transcribe privileges; read more in the "How Amazon Transcribe Works with IAM" article. Once you have this, generate an AWS AccessKey and SecretKey. From the AccessKey, SecretKey, and Region, a special pre-signed URL is generated with AWS Signature Version 4, which is a rather complex process. A WebSocket connection is then opened with this pre-signed URL. A full explanation of the process can be found in the "Using Amazon Transcribe Streaming with WebSockets" article. Another very useful example of how to generate the pre-signed URL and open a WebSocket connection is the amazon-archives/amazon-transcribe-websocket-static GitHub repo.

Issues with generating pre-signed URL in the browser

So far so good; things are starting to make sense. To generate a pre-signed URL in the browser, the AWS AccessKey and SecretKey are needed. One option is to make users provide them every time they want to use the application, which is not user friendly. Another option is to have the web application generate the URL automatically, but that ships the AWS credentials to every browser, where anyone can read them, so it is not really an option. The solution is to have a backend application, which could even be a Lambda function, calculate the pre-signed URL and return it to the frontend.

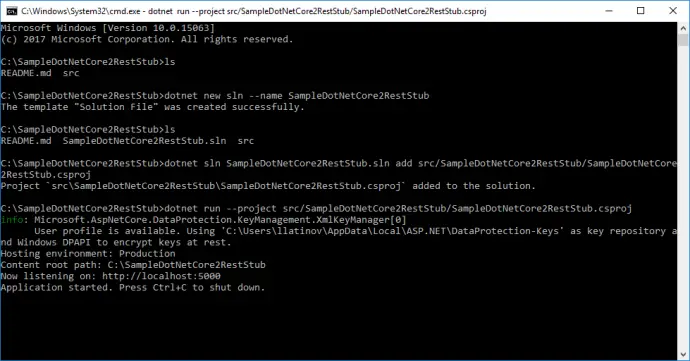

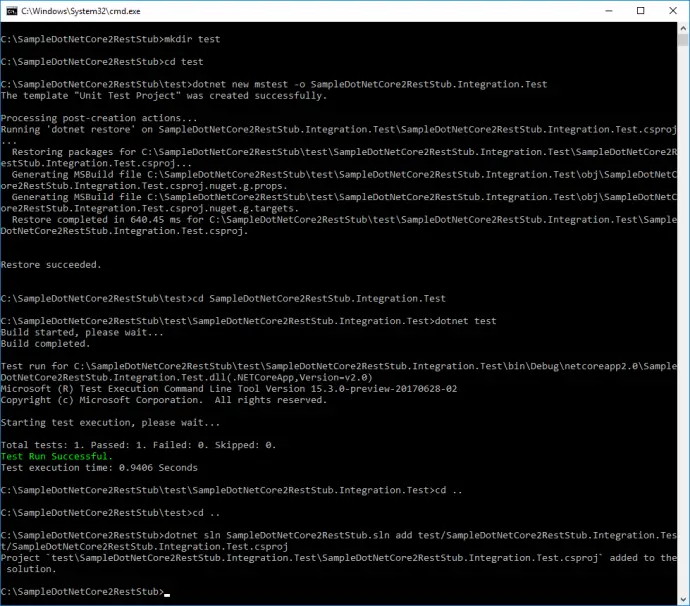

Generate the pre-signed URL in C#

Below is an example .NET Core controller that generates the pre-signed URL in C#:

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Security.Cryptography;
using System.Text;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Options;

// The route matches the URL the React example posts to (http://localhost:3016/url)
[ApiController]
[Route("url")]
public class PresignedUrlController : ControllerBase
{
    private const string Service = "transcribe";
    private const string Path = "/stream-transcription-websocket";
    private const string Scheme = "AWS4";
    private const string Algorithm = "HMAC-SHA256";
    private const string Terminator = "aws4_request";

    private readonly string _region;
    private readonly string _awsAccessKey;
    private readonly string _awsSecretKey;

    // Config is the application's own options class (not shown) holding the AWS settings
    public PresignedUrlController(IOptions<Config> options)
    {
        _region = options.Value.AWS_SPEECH_REGION;
        _awsAccessKey = options.Value.AWS_SPEECH_ACCESS_KEY;
        _awsSecretKey = options.Value.AWS_SPEECH_SECRET_KEY;
    }

    [HttpPost]
    public ActionResult<string> GetPresignedUrl()
    {
        return GenerateUrl();
    }

    private string GenerateUrl()
    {
        var host = $"transcribestreaming.{_region}.amazonaws.com:8443";
        var dateNow = DateTime.UtcNow;
        var dateString = dateNow.ToString("yyyyMMdd", CultureInfo.InvariantCulture);
        var dateTimeString = dateNow.ToString("yyyyMMdd'T'HHmmss'Z'", CultureInfo.InvariantCulture);
        var credentialScope = $"{dateString}/{_region}/{Service}/{Terminator}";

        // The same canonical query string goes into the URL and into the signature
        var query = GenerateQueryParams(dateTimeString, credentialScope);
        var signature = GetSignature(host, query, dateString, dateTimeString, credentialScope);

        return $"wss://{host}{Path}?{query}&X-Amz-Signature={signature}";
    }

    private string GenerateQueryParams(string dateTimeString, string credentialScope)
    {
        var credentials = $"{_awsAccessKey}/{credentialScope}";

        // Signature Version 4 requires the query parameters sorted by key (ordinal order)
        var result = new SortedDictionary<string, string>(StringComparer.Ordinal)
        {
            {"X-Amz-Algorithm", "AWS4-HMAC-SHA256"},
            {"X-Amz-Credential", credentials},
            {"X-Amz-Date", dateTimeString},
            {"X-Amz-Expires", "30"},          // URL validity in seconds
            {"X-Amz-SignedHeaders", "host"},
            {"language-code", "en-US"},
            {"media-encoding", "pcm"},
            {"sample-rate", "44100"}          // must match the rate the client sends
        };

        return string.Join("&", result.Select(x => $"{x.Key}={Uri.EscapeDataString(x.Value)}"));
    }

    private string GetSignature(string host, string query, string dateString, string dateTimeString, string credentialScope)
    {
        var canonicalRequest = CanonicalizeRequest(Path, host, query);
        var canonicalRequestHashBytes = ComputeHash(canonicalRequest);

        // construct the string to be signed
        var stringToSign = new StringBuilder();
        stringToSign.AppendFormat("{0}-{1}\n{2}\n{3}\n", Scheme, Algorithm, dateTimeString, credentialScope);
        stringToSign.Append(ToHexString(canonicalRequestHashBytes));

        // compute the final signature with the derived signing key; it is embedded in the URL
        var signingKey = DeriveSigningKey(_awsSecretKey, _region, dateString, Service);
        var signature = ComputeKeyedHash(signingKey, Encoding.UTF8.GetBytes(stringToSign.ToString()));
        return ToHexString(signature);
    }

    private static string CanonicalizeRequest(string path, string host, string query)
    {
        var canonicalRequest = new StringBuilder();
        canonicalRequest.Append("GET\n");
        canonicalRequest.Append(path).Append('\n');
        canonicalRequest.Append(query).Append('\n');
        canonicalRequest.Append("host:").Append(host).Append('\n'); // canonical headers
        canonicalRequest.Append('\n');                               // end of canonical headers
        canonicalRequest.Append("host\n");                           // signed headers
        canonicalRequest.Append(ToHexString(ComputeHash("")));       // hash of the empty payload
        return canonicalRequest.ToString();
    }

    private static string ToHexString(byte[] data)
    {
        var sb = new StringBuilder(data.Length * 2);
        foreach (var b in data)
        {
            sb.Append(b.ToString("x2"));
        }
        return sb.ToString();
    }

    private static byte[] DeriveSigningKey(string awsSecretAccessKey, string region, string date, string service)
    {
        var kSecret = Encoding.UTF8.GetBytes(Scheme + awsSecretAccessKey);
        var hashDate = ComputeKeyedHash(kSecret, Encoding.UTF8.GetBytes(date));
        var hashRegion = ComputeKeyedHash(hashDate, Encoding.UTF8.GetBytes(region));
        var hashService = ComputeKeyedHash(hashRegion, Encoding.UTF8.GetBytes(service));
        return ComputeKeyedHash(hashService, Encoding.UTF8.GetBytes(Terminator));
    }

    private static byte[] ComputeKeyedHash(byte[] key, byte[] data)
    {
        using (var hmac = new HMACSHA256(key))
        {
            return hmac.ComputeHash(data);
        }
    }

    private static byte[] ComputeHash(string data)
    {
        using (var sha256 = SHA256.Create())
        {
            return sha256.ComputeHash(Encoding.UTF8.GetBytes(data));
        }
    }
}
```
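Because the backend could just as well be a Lambda function, here is a rough TypeScript port of the same signing steps for Node.js, using only the built-in `crypto` module. It is a sketch under the same assumptions as the controller (en-US, PCM, 44100 Hz, 30-second expiry); the function name `presignTranscribeUrl` and its parameters are hypothetical, and the clock is passed in so the output is reproducible.

```typescript
import { createHash, createHmac } from "crypto";

const hmac = (key: string | Buffer, data: string): Buffer =>
  createHmac("sha256", key).update(data, "utf8").digest();
const sha256Hex = (data: string): string => createHash("sha256").update(data, "utf8").digest("hex");

// RFC 3986 encoding as Signature Version 4 expects (encodeURIComponent leaves !'()* alone)
const rfc3986 = (s: string): string =>
  encodeURIComponent(s).replace(/[!'()*]/g, (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase());

// Hypothetical helper: returns a pre-signed wss:// URL for Transcribe streaming.
export function presignTranscribeUrl(accessKey: string, secretKey: string, region: string, now: Date = new Date()): string {
  const service = "transcribe";
  const host = `transcribestreaming.${region}.amazonaws.com:8443`;
  const path = "/stream-transcription-websocket";
  // 2024-01-01T12:00:00.000Z -> 20240101T120000Z
  const amzDate = now.toISOString().replace(/[-:]/g, "").replace(/\.\d{3}/, "");
  const dateStamp = amzDate.slice(0, 8);
  const scope = `${dateStamp}/${region}/${service}/aws4_request`;

  const params: Record<string, string> = {
    "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
    "X-Amz-Credential": `${accessKey}/${scope}`,
    "X-Amz-Date": amzDate,
    "X-Amz-Expires": "30",
    "X-Amz-SignedHeaders": "host",
    "language-code": "en-US",
    "media-encoding": "pcm",
    "sample-rate": "44100",
  };
  // canonical query string: keys sorted by code point, keys and values encoded
  const query = Object.keys(params)
    .sort()
    .map((k) => `${rfc3986(k)}=${rfc3986(params[k])}`)
    .join("&");

  // same canonical request layout as the C# CanonicalizeRequest
  const canonicalRequest = ["GET", path, query, `host:${host}`, "", "host", sha256Hex("")].join("\n");
  const stringToSign = ["AWS4-HMAC-SHA256", amzDate, scope, sha256Hex(canonicalRequest)].join("\n");

  // signing key: HMAC chain over date, region, service and the terminator
  const kDate = hmac(`AWS4${secretKey}`, dateStamp);
  const kRegion = hmac(kDate, region);
  const kService = hmac(kRegion, service);
  const kSigning = hmac(kService, "aws4_request");
  const signature = createHmac("sha256", kSigning).update(stringToSign, "utf8").digest("hex");

  return `wss://${host}${path}?${query}&X-Amz-Signature=${signature}`;
}
```

If the Lambda signs with its execution role's temporary credentials instead of a long-lived key, Signature Version 4 also requires the session token as an `X-Amz-Security-Token` query parameter, which this sketch does not add.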

Use the pre-signed URL in a React application

One of the examples linked above contains plain JavaScript code that opens a WebSocket and uses it. The code below shows how to do the same in React with TypeScript. Note that the WebSocket is closed automatically after 15 seconds with setTimeout(). It is important to have some kind of breaker, because leaving the socket open can generate a significant AWS bill.

```tsx
import React from 'react';
import { EventStreamMarshaller, Message } from '@aws-sdk/eventstream-marshaller';
import { toUtf8, fromUtf8 } from '@aws-sdk/util-utf8-node';
import mic from 'microphone-stream';
import Axios from 'axios';

// Must match the sample-rate query parameter signed by the backend
const sampleRate = 44100;
const eventStreamMarshaller = new EventStreamMarshaller(toUtf8, fromUtf8);

export default () => {
  const [webSocket, setWebSocket] = React.useState<WebSocket>();
  // A ref (not state), so the microphone 'data' handler always reads the current value
  const inputSampleRateRef = React.useRef<number>();

  const streamAudioToWebSocket = async (userMediaStream: MediaStream) => {
    const micStream = new mic();
    micStream.on('format', (data: any) => {
      inputSampleRateRef.current = data.sampleRate;
    });
    micStream.setStream(userMediaStream);

    // ask the backend for a freshly signed URL
    const url = await Axios.post<string>('http://localhost:3016/url');

    // open up our WebSocket connection
    const socket = new WebSocket(url.data);
    socket.binaryType = 'arraybuffer';

    socket.onopen = () => {
      micStream.on('data', (rawAudioChunk: any) => {
        // the audio stream is raw audio bytes. Transcribe expects PCM with additional metadata, encoded as binary
        const binary = convertAudioToBinaryMessage(rawAudioChunk);
        if (binary && socket.readyState === WebSocket.OPEN) {
          socket.send(binary);
        }
      });
    };

    socket.onmessage = (message: MessageEvent) => {
      const messageWrapper = eventStreamMarshaller.unmarshall(Buffer.from(message.data));
      // the message body is UTF-8 encoded JSON
      const messageBody = JSON.parse(toUtf8(messageWrapper.body));
      if (messageWrapper.headers[':message-type'].value === 'event') {
        handleEventStreamMessage(messageBody);
      } else {
        console.error(messageBody.Message);
        stop(socket);
      }
    };

    socket.onerror = () => {
      stop(socket);
    };

    // breaker: close the socket after 15 seconds so it cannot keep running up the AWS bill
    const breaker = setTimeout(() => stop(socket), 15000);

    socket.onclose = () => {
      // an early close (Stop button, error) cancels the breaker
      clearTimeout(breaker);
      micStream.stop();
    };

    setWebSocket(socket);
    console.log('Amazon started');
  };

  const convertAudioToBinaryMessage = (audioChunk: any): Uint8Array | undefined => {
    const raw = mic.toRaw(audioChunk);
    if (raw == null) return undefined;

    // downsample and convert the raw audio bytes to PCM
    const downsampledBuffer = downsampleBuffer(raw, inputSampleRateRef.current, sampleRate);
    const pcmEncodedBuffer = pcmEncode(downsampledBuffer);

    // add the right JSON headers and structure to the message
    const audioEventMessage = getAudioEventMessage(Buffer.from(pcmEncodedBuffer));

    // convert the JSON object + headers into a binary event stream message
    return eventStreamMarshaller.marshall(audioEventMessage);
  };

  const getAudioEventMessage = (buffer: Buffer): Message => {
    // wrap the audio data in a JSON envelope
    return {
      headers: {
        ':message-type': {
          type: 'string',
          value: 'event',
        },
        ':event-type': {
          type: 'string',
          value: 'AudioEvent',
        },
      },
      body: buffer,
    };
  };

  // 16-bit signed little-endian PCM: clamp each float sample to [-1, 1] and scale it
  const pcmEncode = (input: Float32Array) => {
    const buffer = new ArrayBuffer(input.length * 2);
    const view = new DataView(buffer);
    for (let i = 0, offset = 0; i < input.length; i++, offset += 2) {
      const s = Math.max(-1, Math.min(1, input[i]));
      view.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7fff, true);
    }
    return buffer;
  };

  // average the input samples that fall into each output sample
  const downsampleBuffer = (buffer: Float32Array, inputSampleRate: number = 44100, outputSampleRate: number = 16000) => {
    if (outputSampleRate === inputSampleRate) {
      return buffer;
    }
    const sampleRateRatio = inputSampleRate / outputSampleRate;
    const newLength = Math.round(buffer.length / sampleRateRatio);
    const result = new Float32Array(newLength);
    let offsetResult = 0;
    let offsetBuffer = 0;
    while (offsetResult < result.length) {
      const nextOffsetBuffer = Math.round((offsetResult + 1) * sampleRateRatio);
      let accum = 0;
      let count = 0;
      for (let i = offsetBuffer; i < nextOffsetBuffer && i < buffer.length; i++) {
        accum += buffer[i];
        count++;
      }
      result[offsetResult] = count > 0 ? accum / count : 0;
      offsetResult++;
      offsetBuffer = nextOffsetBuffer;
    }
    return result;
  };

  const handleEventStreamMessage = (messageJson: any) => {
    const results = messageJson.Transcript.Results;
    if (results.length > 0 && results[0].Alternatives.length > 0) {
      const transcript: string = results[0].Alternatives[0].Transcript;
      // if this transcript segment is final, log it without punctuation
      if (!results[0].IsPartial) {
        const text = transcript.toLowerCase().replace(/[.?!]/g, '');
        console.log(text);
      }
    }
  };

  const start = () => {
    // first we get the microphone input from the browser (as a promise)...
    window.navigator.mediaDevices
      .getUserMedia({
        video: false,
        audio: true,
      })
      // ...then we convert the mic stream to binary event stream messages when the promise resolves
      .then(streamAudioToWebSocket)
      .catch((error) => {
        console.error('Please check that your microphone is working and try again.', error);
      });
  };

  const stop = (socket: WebSocket) => {
    if (socket) {
      socket.close();
      setWebSocket(undefined);
      console.log('Amazon stopped');
    }
  };

  return (
    <div className="App">
      <button onClick={() => (webSocket ? stop(webSocket) : start())}>{webSocket ? 'Stop' : 'Start'}</button>
    </div>
  );
};
```
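The 15-second `setTimeout` breaker in the component can also be pulled out into a small helper that is easier to reuse and test. The sketch below is hypothetical (`closeAfter` and the `Closable` interface are not part of any library); it accepts anything with a `close()` method and `addEventListener('close', ...)`, such as a browser `WebSocket`, and cancels the timer when the socket closes early, for example because the user pressed Stop.

```typescript
// Minimal shape the helper needs; a browser WebSocket satisfies it.
interface Closable {
  close(): void;
  addEventListener(type: "close", listener: () => void): void;
}

// Closes `socket` after `ms` milliseconds unless it closes earlier.
// Returns a function that cancels the pending timer.
export function closeAfter(socket: Closable, ms: number): () => void {
  const timer = setTimeout(() => socket.close(), ms);
  const cancel = () => clearTimeout(timer);
  // an early close (Stop button, server error) cancels the breaker
  socket.addEventListener("close", cancel);
  return cancel;
}
```

Inside `streamAudioToWebSocket`, the `setTimeout` and `clearTimeout` calls could then be replaced by a single `closeAfter(socket, 15000)`.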

TypeScript declaration

The microphone-stream module does not have a TypeScript type package. To make the import compile, create a file named microphone-stream.d.ts with the content `declare module 'microphone-stream';`, which gives the module the type `any`.

Conclusion

AWS Transcribe is a really easy-to-use service that does not require a significant implementation effort. Compared with Google Speech-to-Text, which I found much harder to get working, it is a pleasant experience.